Luna Translator and Sugoi LLM 14B/32B

Easy Method: Access via the Luna UI

- First, make sure the model is running, for example in LM Studio or another local GGUF loader such as Ollama.

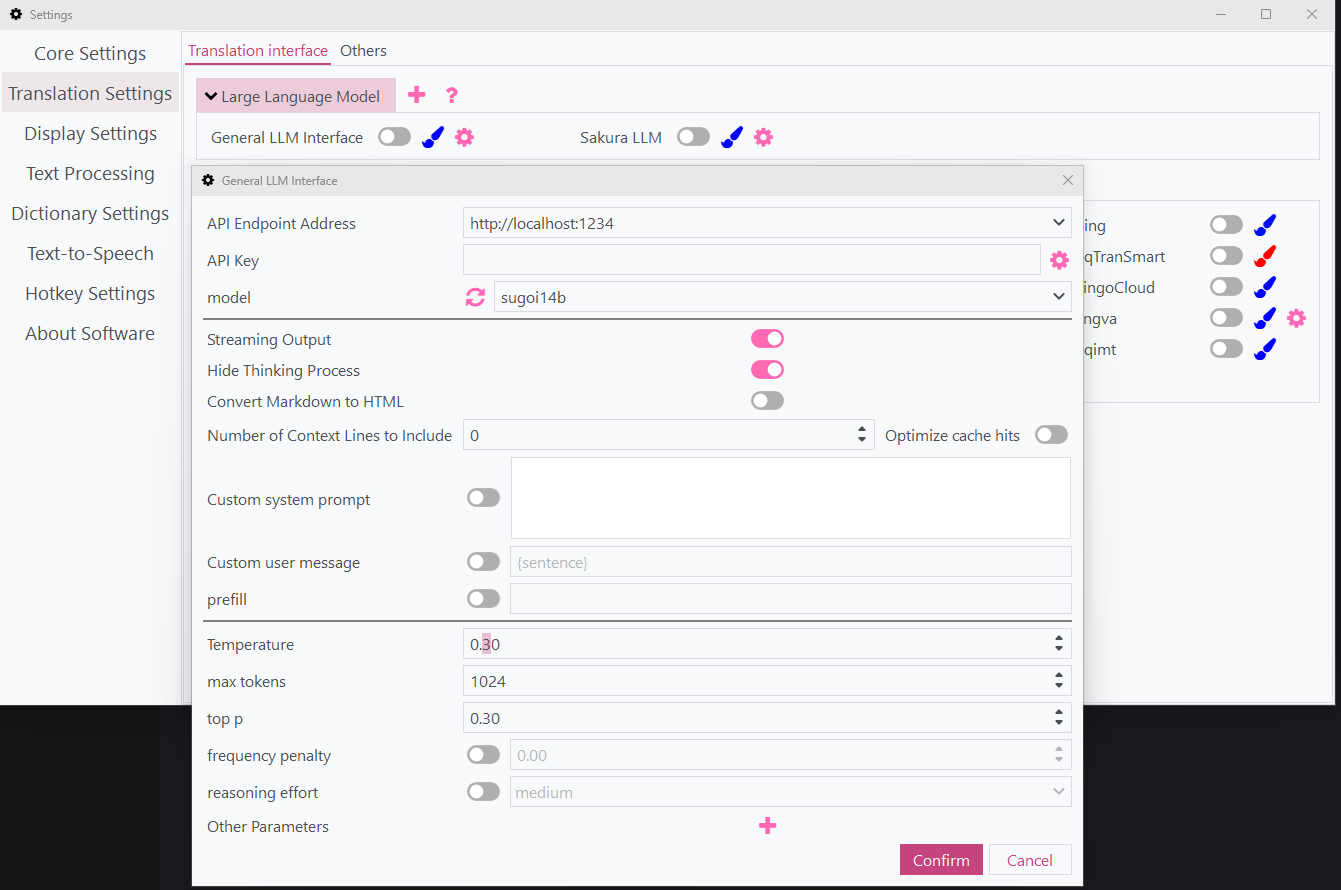

- In LunaTranslator, go to Translation Settings → Translation Interface → Large Language Model.

- Edit the General LLM Interface.

- Add the LM Studio server address and port (e.g., http://localhost:1234, or http://localhost:11434 for Ollama).

- Click the refresh symbol and choose the model from the list.

Done.

This method allows you to use the model directly from Luna's UI without needing to write or modify any scripts.
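If the model list stays empty after clicking refresh, you can check the server from outside Luna by querying its model list. The sketch below assumes the server exposes the OpenAI-compatible `GET /v1/models` endpoint (LM Studio and recent Ollama versions document one) and uses only the Python standard library. `list_models` and `model_ids` are hypothetical helper names, and the base URL is an example to adjust to your setup.

```python
import json
import urllib.request


def model_ids(payload):
    """Extract model IDs from an OpenAI-style /v1/models response body."""
    return [entry["id"] for entry in payload.get("data", [])]


def list_models(base_url="http://localhost:1234"):
    """Fetch model IDs from a local OpenAI-compatible server (hypothetical helper)."""
    # Assumption: the server answers GET <base_url>/v1/models with {"data": [{"id": ...}, ...]}
    with urllib.request.urlopen(f"{base_url}/v1/models", timeout=5) as resp:
        return model_ids(json.load(resp))


if __name__ == "__main__":
    try:
        for name in list_models():
            print(name)
    except OSError as exc:  # URLError is an OSError: server not running or wrong port
        print(f"Could not reach the server: {exc}")
```

The printed IDs should match what the refresh button lists. For the script-based method below, the same ID is what goes into the `"model"` field.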

Alternative Method: Custom Script (for Power Users)

Configuration

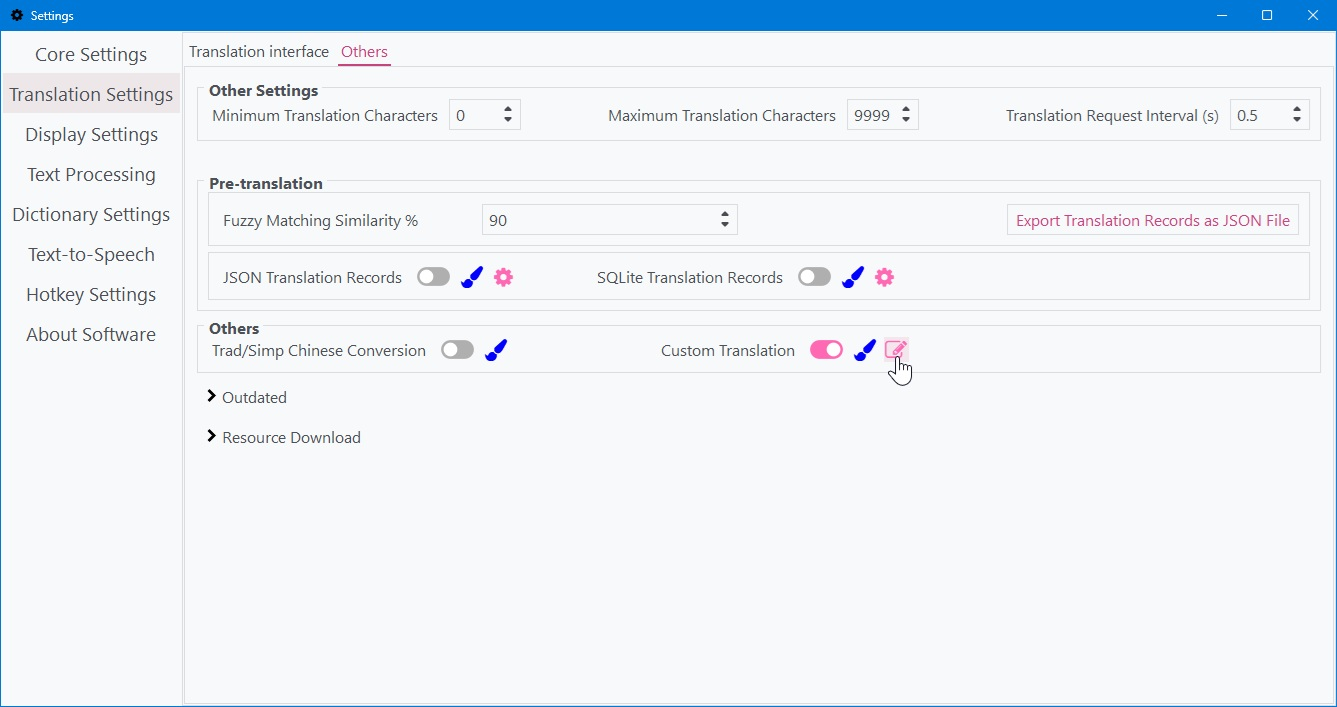

- Find the custom translation setting. Its location varies between LunaTranslator versions; in version 10.5.4 it is under the Others tab.

- Enable custom translation and click Edit. Paste the template below, then restart LunaTranslator:

Note: Don't forget to disable the other translators.

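```python
import requests
import json
import time
from translator.basetranslator import basetrans
from traceback import print_exc


class TS(basetrans):
    def translate(self, query):
        usingstream = True
        # 11434 = Ollama default, 1234 = LM Studio default; change to match your server (e.g. 5000)
        url = "http://localhost:11434/v1/chat/completions"
        headers = {"Content-Type": "application/json"}
        data = {
            "model": "sugoi14b",  # must match the model name your server reports
            "messages": [
                {"role": "system", "content": "You are a translator"},
                {"role": "user", "content": query},
            ],
            "max_tokens": 4096,
            "n": 1,
            "top_p": 0.9,
            "temperature": 0.3,
            "stream": usingstream,
        }
        response = requests.post(url, headers=headers, json=data, stream=usingstream)
        response.raise_for_status()  # surface HTTP errors (wrong port, unknown model, ...)

        if usingstream:
            message = ""
            # Server-sent events: each non-empty line looks like 'data: {...}'
            for chunk in response.iter_lines():
                response_data = chunk.decode("utf-8").strip()
                if not response_data:
                    continue
                # Remove the SSE prefix; str.lstrip("data: ") strips characters, not a prefix
                if response_data.startswith("data:"):
                    response_data = response_data[len("data:"):].strip()
                if response_data == "[DONE]":  # end-of-stream marker
                    break
                try:
                    json_data = json.loads(response_data)
                    choice = json_data["choices"][0]
                    msg = choice.get("delta", {}).get("content") or ""
                    # Emit one character at a time for a smooth typing animation
                    for char in msg:
                        yield char
                        time.sleep(0.02)
                    message += msg
                    # Check finish_reason after reading content so the last chunk is not dropped
                    if choice.get("finish_reason") is not None:
                        break
                except Exception as e:
                    print_exc()
                    raise Exception(f"Error processing response: {response_data}, Error: {str(e)}")
        else:
            try:
                message = (
                    response.json()["choices"][0]["message"]["content"]
                    .replace("\n\n", "\n")
                    .strip()
                )
                yield message
            except Exception as e:
                raise Exception(f"Error processing response: {response.text}, Error: {str(e)}")
```

Note: Streaming output works, but it may feel slow with very long text because of the per-character smoothing delay (`time.sleep(0.02)`) used for the typing animation. Lower the delay, or set `usingstream = False` to receive the whole translation at once.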
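To see what the streaming branch does without starting a server, the sketch below applies the same parsing steps to a hand-written list of server-sent-event lines. The sample lines are invented and assume the OpenAI-compatible chunk format (`data: {...}` lines, ending with `data: [DONE]`). `parse_sse_lines` is a hypothetical helper, not part of LunaTranslator, and the per-character delay is left out.

```python
import json


def parse_sse_lines(lines):
    """Yield content fragments from OpenAI-style streaming lines (bytes or str)."""
    for raw in lines:
        line = raw.decode("utf-8") if isinstance(raw, bytes) else raw
        line = line.strip()
        if not line:
            continue  # blank separator lines between events
        if line.startswith("data:"):
            line = line[len("data:"):].strip()  # remove the prefix, not a character set
        if line == "[DONE]":
            break
        choice = json.loads(line)["choices"][0]
        content = choice.get("delta", {}).get("content") or ""
        if content:
            yield content
        # Stop only after the chunk's content has been emitted
        if choice.get("finish_reason") is not None:
            break


# Hand-written sample stream (invented data, not captured from a real server)
sample = [
    b'data: {"choices": [{"delta": {"role": "assistant"}, "finish_reason": null}]}',
    b"",
    b'data: {"choices": [{"delta": {"content": "Good "}, "finish_reason": null}]}',
    b'data: {"choices": [{"delta": {"content": "morning."}, "finish_reason": "stop"}]}',
    b"data: [DONE]",
]

print("".join(parse_sse_lines(sample)))  # Good morning.
```

The last sample chunk carries both text and a `finish_reason`. If a server sends its final chunk this way, checking `finish_reason` before reading the content would drop the last words, which is why the content is read first.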