How to use any LLM translator with Sugoi Toolkit

Starting from V11, we support adding almost any kind of LLM you can think of. This is done by leveraging a package called LiteLLM.

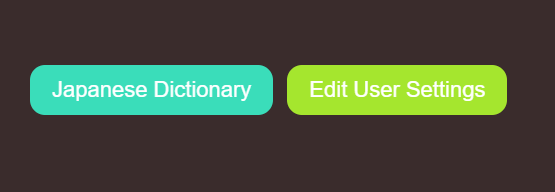

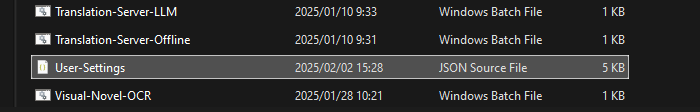

Start by opening the user settings. There are two ways to do this:

- Simple way: click "Edit User Settings" in the menu.

- Technical way: find User-Settings.json inside the Code folder and open it with an IDE or text editor.
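If you take the technical route, a stray comma or missing quote is an easy mistake to make when editing JSON by hand. As a small convenience check, the Python sketch below uses the standard json module to confirm the file still parses. The path "Code/User-Settings.json" is an assumption about where you run the script from, so adjust it to your install.

```python
import json
from pathlib import Path
from typing import Optional


def json_error(text: str) -> Optional[str]:
    """Return a readable parse error, or None if text is valid JSON."""
    try:
        json.loads(text)
    except json.JSONDecodeError as err:
        return f"line {err.lineno}, column {err.colno}: {err.msg}"
    return None


# Hypothetical location: adjust to wherever your Code folder lives.
settings_path = Path("Code") / "User-Settings.json"
if settings_path.exists():
    # utf-8-sig also accepts files saved with a byte-order mark.
    problem = json_error(settings_path.read_text(encoding="utf-8-sig"))
    print(problem or "User-Settings.json is valid JSON")
```

Run it after saving your edits; if it reports a line and column, that is where the JSON stopped making sense.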

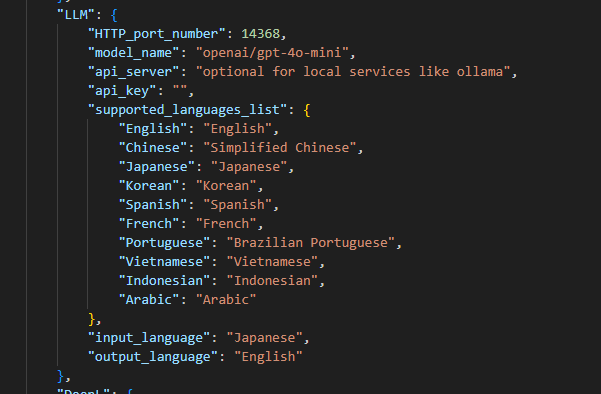

There are two main types of LLM: cloud LLMs such as ChatGPT, and local LLMs such as Llama. For a cloud LLM, you NEED to provide an "api_key". For a local LLM, you NEED to fill in "api_server".
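A minimal Python sketch of that rule, assuming a simplified, flat settings entry with "model_name", "api_key" and "api_server" keys (the real User-Settings.json may nest these differently). The LOCAL_PROVIDERS set is hypothetical: it decides which model_name prefixes count as local.

```python
# Hypothetical: model_name prefixes treated as local servers in this sketch.
LOCAL_PROVIDERS = {"ollama", "lm_studio", "oobabooga"}


def missing_fields(settings: dict) -> list:
    """Return the required field that is blank for this LLM entry, if any.

    Cloud providers need "api_key"; local providers need "api_server".
    """
    provider = (settings.get("model_name") or "").split("/", 1)[0]
    required = "api_server" if provider in LOCAL_PROVIDERS else "api_key"
    # Treat missing keys, null values and whitespace-only strings as blank.
    if not (settings.get(required) or "").strip():
        return [required]
    return []


print(missing_fields({"model_name": "openai/gpt-4o", "api_key": ""}))
# → ['api_key']
print(missing_fields({"model_name": "ollama/llama2",
                      "api_server": "http://localhost:11434"}))
# → []
```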

Here are some examples of "model_name" from different providers:

- OpenAI: "openai/gpt-4o"

- Anthropic: "anthropic/claude-3-sonnet-20240229"

- Ollama: "ollama/llama2"

- OpenRouter: "openrouter/google/palm-2-chat-bison"

- Gemini: "gemini/gemini-pro"

- DeepSeek: "deepseek/deepseek-chat"
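Every example above follows a "provider/model" pattern. A rough Python sketch of splitting such a name, assuming only the first "/" marks the provider so that nested names like OpenRouter's keep their remaining slashes. The helper split_model_name is hypothetical, and LiteLLM's own parsing handles more special cases than this.

```python
def split_model_name(model_name: str) -> tuple:
    """Split a "provider/model" string into (provider, model).

    Only the first "/" separates the provider; the rest is the model id.
    """
    provider, sep, model = model_name.partition("/")
    if not sep or not provider or not model:
        raise ValueError(f"expected 'provider/model', got {model_name!r}")
    return provider, model


for name in ["openai/gpt-4o", "openrouter/google/palm-2-chat-bison"]:
    print(split_model_name(name))
# prints ('openai', 'gpt-4o') then ('openrouter', 'google/palm-2-chat-bison')
```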

Here are some examples of "model_name" for local servers:

- LM Studio: "lm_studio/llama2"

- Ollama: "ollama/llama2"

- Oobabooga: "oobabooga/llama2"

Note: the prefix is important. "lm_studio/" tells LiteLLM which backend driver to use, in this case the LM Studio local server. You can find more models inside this link.
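For local servers, "api_server" has to point at the running server. The sketch below fills it in from a hypothetical table of defaults, assuming Ollama's usual address http://localhost:11434 and LM Studio's usual port 1234. Whether LM Studio needs the "/v1" suffix is an assumption worth checking against LiteLLM's provider page, and Oobabooga is left out rather than guessed.

```python
# Assumed out-of-the-box addresses; change them if your server runs elsewhere.
DEFAULT_LOCAL_SERVERS = {
    "ollama": "http://localhost:11434",
    "lm_studio": "http://localhost:1234/v1",
}


def with_default_server(settings: dict) -> dict:
    """Return a copy of settings with api_server filled for known local backends.

    An api_server the user already set is never overwritten.
    """
    result = dict(settings)
    provider = (result.get("model_name") or "").split("/", 1)[0]
    blank = not (result.get("api_server") or "").strip()
    if blank and provider in DEFAULT_LOCAL_SERVERS:
        result["api_server"] = DEFAULT_LOCAL_SERVERS[provider]
    return result


print(with_default_server({"model_name": "lm_studio/llama2", "api_server": ""}))
# → {'model_name': 'lm_studio/llama2', 'api_server': 'http://localhost:1234/v1'}
```

Keeping any value the user already typed means a server on another machine or port is left alone.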

"api_server" in the LLM user settings is the same as "api_base" in the LiteLLM documentation. Many translators don't require "api_server" at all, so you can leave it blank if the LiteLLM documentation for your provider doesn't mention "api_base".
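To make the renaming concrete, here is a minimal sketch that turns a flat, hypothetical settings entry into keyword arguments for LiteLLM's `completion` function, mapping "api_server" to "api_base" and dropping blank fields. The helper to_litellm_kwargs is made up for illustration and is not part of Sugoi Toolkit.

```python
def to_litellm_kwargs(settings: dict) -> dict:
    """Translate a flat LLM settings entry into LiteLLM keyword arguments.

    "api_server" is renamed to "api_base"; blank values are dropped.
    """
    kwargs = {"model": settings["model_name"]}
    for ours, theirs in (("api_key", "api_key"), ("api_server", "api_base")):
        value = (settings.get(ours) or "").strip()
        if value:
            kwargs[theirs] = value
    return kwargs


kwargs = to_litellm_kwargs(
    {"model_name": "ollama/llama2", "api_key": "", "api_server": "http://localhost:11434"}
)
print(kwargs)  # → {'model': 'ollama/llama2', 'api_base': 'http://localhost:11434'}

# With the litellm package installed, the call would look roughly like:
# import litellm
# litellm.completion(messages=[{"role": "user", "content": "こんにちは"}], **kwargs)
```

Dropping blank fields leaves LiteLLM free to use its own defaults, for example an API key read from an environment variable.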

My recommendation is to get an OpenRouter API key, since it gives you access to nearly every AI model out there.